4.5.0

Crunchy Data announces the release of the PostgreSQL Operator 4.5.0 on October 2, 2020.

The PostgreSQL Operator is released in conjunction with the Crunchy Container Suite.

The PostgreSQL Operator 4.5.0 release includes the following software version upgrades:

- Added support for PostgreSQL 13.

- pgBackRest is now at version 2.29.

- postgres_exporter is now at version 0.8.0.

- pgMonitor support is now at version 4.4.

- pgnodemx is now at version 1.0.1.

- wal2json is now at version 2.3.

- Patroni is now at version 2.0.0.

Additionally, PostgreSQL Operator 4.5.0 introduces support for the CentOS 8 and UBI 8 base container images. In addition to using the newer operating systems, this enables support for TLS 1.3 when connecting to PostgreSQL. This release also moves to building the containers using Buildah 1.14.9.

The monitoring stack for the PostgreSQL Operator has shifted to use upstream components as opposed to repackaging them. These are specified as part of the PostgreSQL Operator Installer. We have tested this release with the following versions of each component:

- Prometheus: 2.20.0

- Grafana: 6.7.4

- Alertmanager: 0.21.0

PostgreSQL Operator is tested with Kubernetes 1.15 - 1.19, OpenShift 3.11+, OpenShift 4.4+, Google Kubernetes Engine (GKE), Amazon EKS, and VMware Enterprise PKS 1.3+.

Major Features

PostgreSQL Operator Monitoring

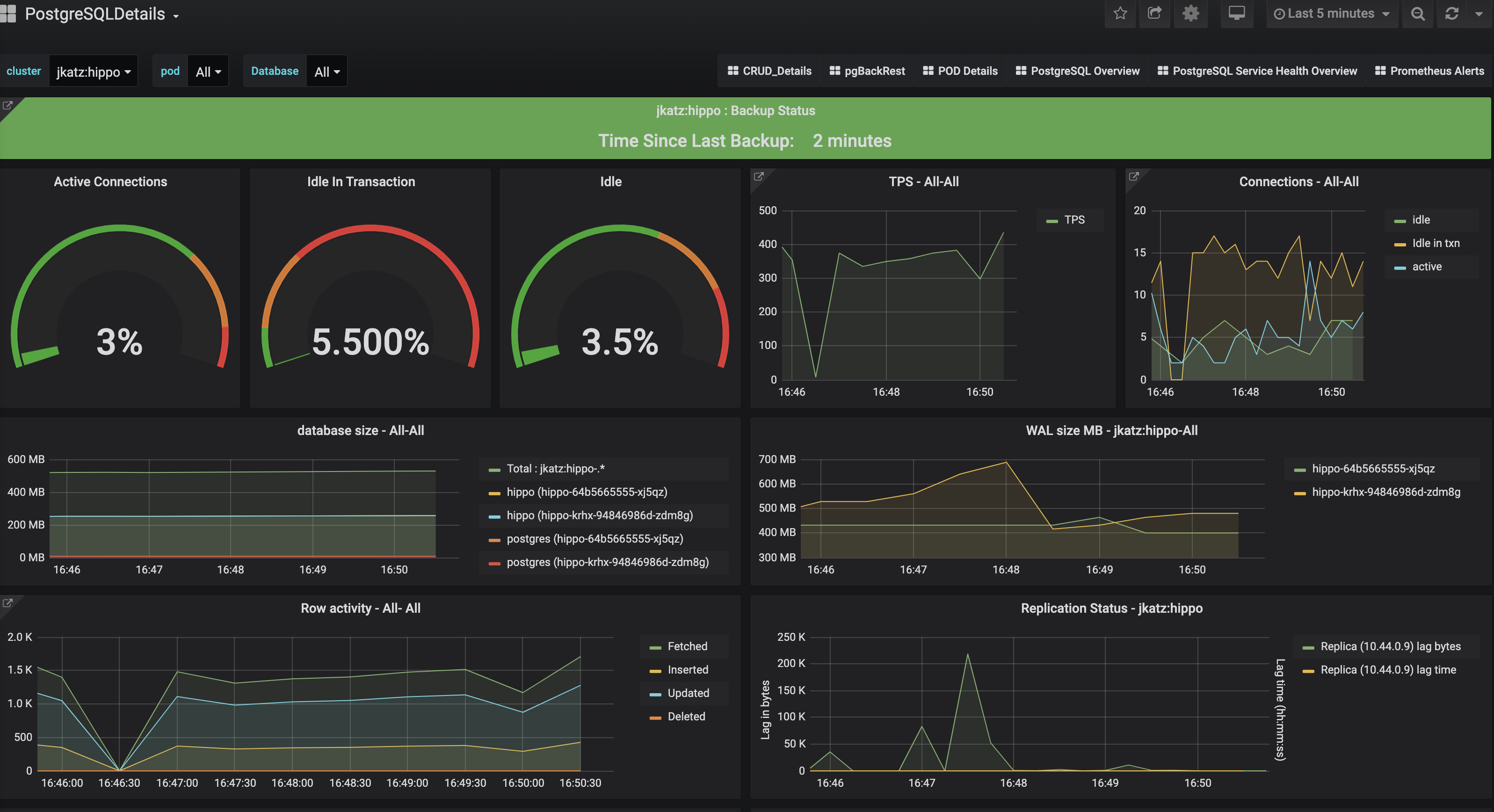

This release makes several changes to the PostgreSQL Operator Monitoring solution, notably making it much easier to set up a turnkey PostgreSQL monitoring solution with the PostgreSQL Operator using the open source pgMonitor project.

pgMonitor combines insightful queries for PostgreSQL with several proven tools for statistics collection, data visualization, and alerting, allowing one to deploy a turnkey monitoring solution for PostgreSQL. The pgMonitor 4.4 release added support for Kubernetes environments, particularly through the pgnodemx extension, which allows one to get host-like information from the Kubernetes Pod in which a PostgreSQL instance is deployed.

PostgreSQL Operator 4.5 integrates with pgMonitor to take advantage of its Kubernetes support, and provides the following visualized metrics out-of-the-box:

- Pod metrics (CPU, Memory, Disk activity)

- PostgreSQL utilization (Database activity, database size, WAL size, replication lag)

- Backup information, including last backup and backup size

- Network utilization (traffic, saturation, latency)

- Alerts (uptime et al.)

More metrics and visualizations will be added in future releases. You can further customize these to meet the needs of your environment.

PostgreSQL Operator 4.5 uses the upstream packages for Prometheus, Grafana, and Alertmanager. Those using earlier versions of monitoring provided with the PostgreSQL Operator will need to switch to those packages. The tested versions of these packages for PostgreSQL Operator 4.5 include:

- Prometheus (2.20.0)

- Grafana (6.7.4)

- Alertmanager (0.21.0)

You can find out how to install PostgreSQL Operator Monitoring in the installation section:

https://access.crunchydata.com/documentation/postgres-operator/latest/latest/installation/metrics/

Customizing pgBackRest via ConfigMap

pgBackRest powers the disaster recovery capabilities of PostgreSQL clusters deployed by the PostgreSQL Operator. While the PostgreSQL Operator provides many toggles to customize a pgBackRest configuration, it can be easier to do so directly using the pgBackRest configuration file format.

This release adds the ability to specify the pgBackRest configuration from either a ConfigMap or a Secret by using the pgo create cluster --pgbackrest-custom-config flag, or by setting the BackrestConfig attributes in the pgcluster CRD. Setting this option allows any pgBackRest resource (Pod, Job, etc.) to leverage the custom configuration.

Note that some settings will be overridden by the PostgreSQL Operator regardless of the settings in a customized pgBackRest configuration file, due to the way the PostgreSQL instances managed by the Operator access pgBackRest. However, these are typically not the settings that one wants to customize.
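As an illustration of the configuration file format, the following Python sketch renders a small pgBackRest configuration and wraps it in a Kubernetes ConfigMap manifest that could then be applied with kubectl apply -f. The ConfigMap name hippo-pgbackrest-config, the data key pgbackrest.conf, and the chosen options (repo1-retention-full, process-max) are assumptions for illustration only; check the PostgreSQL Operator documentation for the exact key the Operator expects.

```python
import configparser
import io
import json


def render_pgbackrest_conf(options: dict) -> str:
    """Render pgBackRest options as an INI-style [global] section."""
    config = configparser.ConfigParser()
    # Preserve option case (ConfigParser lowercases keys by default).
    config.optionxform = str
    config["global"] = {key: str(value) for key, value in options.items()}
    buf = io.StringIO()
    # pgBackRest examples write options as key=value, so omit spaces around "=".
    config.write(buf, space_around_delimiters=False)
    return buf.getvalue()


def configmap_manifest(name: str, conf_text: str) -> dict:
    """Wrap the rendered config in a ConfigMap manifest."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        # Assumption: the file is stored under a key named "pgbackrest.conf".
        "data": {"pgbackrest.conf": conf_text},
    }


if __name__ == "__main__":
    conf = render_pgbackrest_conf({"repo1-retention-full": 2, "process-max": 2})
    manifest = configmap_manifest("hippo-pgbackrest-config", conf)
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

The resulting ConfigMap would presumably then be referenced at cluster creation, for example with pgo create cluster hippo --pgbackrest-custom-config=hippo-pgbackrest-config, where the cluster name is illustrative.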

Apply Custom Annotations to Managed Deployments

It is now possible to add custom annotations to the Deployments that the PostgreSQL Operator manages. These include:

- PostgreSQL instances

- pgBackRest repositories

- pgBouncer instances

Annotations are applied on a per-cluster basis, and can be set either for all the managed Deployments within a cluster or individual Deployment groups. The annotations can be set as part of the Annotations section of the pgcluster specification.

This also introduces several flags to the pgo client that help with managing annotations. These flags are available on the pgo create cluster and pgo update cluster commands and include:

- --annotation: applies annotations on all managed Deployments

- --annotation-postgres: applies annotations on all managed PostgreSQL Deployments

- --annotation-pgbackrest: applies annotations on all managed pgBackRest Deployments

- --annotation-pgbouncer: applies annotations on all managed pgBouncer Deployments

These flags work similarly to how one manages annotations and labels from kubectl. To add an annotation, one follows the format:

--annotation=key=value

To remove an annotation, one follows the format:

--annotation=key-
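To make the add/remove syntax concrete, the following Python sketch applies a sequence of kubectl-style annotation arguments to an existing annotation map: key=value sets or overwrites an annotation, and key- removes it. The function apply_annotation_args is hypothetical and only models the syntax described above; it is not the pgo client's implementation.

```python
def apply_annotation_args(current: dict, args: list) -> dict:
    """Apply kubectl-style annotation arguments to a copy of `current`.

    "key=value" adds or overwrites an annotation; "key-" removes it.
    """
    result = dict(current)
    for arg in args:
        if "=" in arg:
            # Split on the first "=" only, so values may themselves contain "=".
            key, value = arg.split("=", 1)
            result[key] = value
        elif arg.endswith("-"):
            # A trailing "-" removes the key; removing a missing key is a no-op.
            result.pop(arg[:-1], None)
        else:
            raise ValueError(f"unrecognized annotation argument: {arg!r}")
    return result


if __name__ == "__main__":
    before = {"team": "db", "owner": "alice"}
    # Similar in spirit to: --annotation=env=prod --annotation=owner-
    print(apply_annotation_args(before, ["env=prod", "owner-"]))
    # {'team': 'db', 'env': 'prod'}
```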

Breaking Changes

- The crunchy-collect container, used for metrics collection, is renamed to crunchy-postgres-exporter.

- The backrest-restore-<fromClusterName>-to-<toPVC> pgtask has been renamed to backrest-restore-<clusterName>. Additionally, the following parameters no longer need to be specified for the pgtask:
  - pgbackrest-stanza
  - pgbackrest-db-path
  - pgbackrest-repo-path
  - pgbackrest-repo-host
  - backrest-s3-verify-tls

- When a restore job completes, it now emits the message “restored Primary created” instead of “restored PVC created”.

- The toPVC parameter has been removed from the restore request endpoint.

- Restore jobs using pg_restore no longer have from-<pvcName> in their names.

- The pgo-backrest-restore container has been retired.

- The pgo load command has been removed. This also retires the pgo-load container.

- The crunchy-prometheus and crunchy-grafana containers are now removed. Please use the corresponding upstream containers.

Features

- The metrics collection container now has configurable resources. These can be set as part of the custom resource workflow as well as from the pgo client using the following command-line arguments:
  - CPU resource requests:
    - pgo create cluster --exporter-cpu
    - pgo update cluster --exporter-cpu
  - CPU resource limits:
    - pgo create cluster --exporter-cpu-limit
    - pgo update cluster --exporter-cpu-limit
  - Memory resource requests:
    - pgo create cluster --exporter-memory
    - pgo update cluster --exporter-memory
  - Memory resource limits:
    - pgo create cluster --exporter-memory-limit
    - pgo update cluster --exporter-memory-limit

- Support for TLS 1.3 connections to PostgreSQL when using the UBI 8 and CentOS 8 containers.

- Added support for the pgnodemx extension, which makes container-level metrics (CPU, memory, storage utilization) available via a PostgreSQL-based interface.
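The four exporter flags listed above follow the usual Kubernetes split between resource requests and limits. The following Python sketch shows that correspondence by turning flag values into a container resources block. The function name exporter_resources and the example quantities are illustrative assumptions: the sketch models only the flag-to-field mapping described above, not how the Operator actually constructs the exporter container.

```python
from typing import Optional


def exporter_resources(
    cpu: Optional[str] = None,           # --exporter-cpu
    cpu_limit: Optional[str] = None,     # --exporter-cpu-limit
    memory: Optional[str] = None,        # --exporter-memory
    memory_limit: Optional[str] = None,  # --exporter-memory-limit
) -> dict:
    """Map exporter flag values onto a Kubernetes container `resources` block.

    Flags that are not set are omitted, leaving the corresponding field unset.
    """
    requests = {k: v for k, v in (("cpu", cpu), ("memory", memory)) if v is not None}
    limits = {k: v for k, v in (("cpu", cpu_limit), ("memory", memory_limit)) if v is not None}
    resources = {}
    if requests:
        resources["requests"] = requests
    if limits:
        resources["limits"] = limits
    return resources


if __name__ == "__main__":
    # Illustrative values, e.g. from: --exporter-cpu=100m --exporter-memory-limit=128Mi
    print(exporter_resources(cpu="100m", memory_limit="128Mi"))
    # {'requests': {'cpu': '100m'}, 'limits': {'memory': '128Mi'}}
```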

Changes

- The PostgreSQL Operator now supports the default storage class that is available within a Kubernetes cluster. The installers are updated to use the default storage class by default.

- The pgo restore methodology is changed to mirror the approach taken by pgo create cluster --restore-from, which was introduced in the previous release. While pgo restore will still perform a “restore in-place”, it will now take the following actions:
  - Any existing persistent volume claims (PVCs) in the cluster are removed.
  - New PVCs are initialized, and the data from the PostgreSQL cluster is restored based on the parameters specified in pgo restore.
  - Any customizations for the cluster (e.g. custom PostgreSQL configuration) will be available.

  This also fixes several bugs that were reported with the pgo restore functionality, some of which are captured further down in these release notes.

- Connections to pgBouncer can now be passed along to the default postgres database. If you have a pre-existing pgBouncer Deployment, the most convenient way to access this functionality is to redeploy pgBouncer for that PostgreSQL cluster (pgo delete pgbouncer followed by pgo create pgbouncer). Suggested by (@lgarcia11).

- The Downward API is now available to PostgreSQL instances.

- The pgBouncer pgbouncer.ini and pg_hba.conf files have been moved from the pgBouncer Secret to a ConfigMap whose name follows the pattern <clusterName>-pgbouncer-cm. These are mounted as part of a projected volume in conjunction with the current pgBouncer Secret.

- The pgo df command will round values over 1000 up to the next unit type, e.g. 1GiB instead of 1024MiB.
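The pgo df rounding rule can be sketched as follows: while a value exceeds 1000 in its current unit, divide by 1024 and move to the next binary unit. This Python function is a hypothetical illustration of the behavior described above, not the pgo client's code. It assumes the threshold is strictly greater than 1000 and that results are shown with at most two decimal places.

```python
UNITS = ["B", "KiB", "MiB", "GiB", "TiB", "PiB"]


def format_size(num_bytes: float) -> str:
    """Format a byte count, moving to the next binary unit whenever the value exceeds 1000."""
    value = float(num_bytes)
    unit_index = 0
    # Divide by 1024 while the value is "over 1000" and a larger unit exists.
    while value > 1000 and unit_index < len(UNITS) - 1:
        value /= 1024
        unit_index += 1
    # Round to two decimals and let :g drop trailing zeros (1.0 -> "1").
    return f"{round(value, 2):g}{UNITS[unit_index]}"


if __name__ == "__main__":
    print(format_size(1024 * 1024**2))  # 1GiB rather than 1024MiB
    print(format_size(512 * 1024**2))   # 512MiB
```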

Fixes

- Ensure that a PostgreSQL cluster recreated from a PVC with existing data applies any custom PostgreSQL configuration settings that are specified.

- Fixed issues with PostgreSQL replica Pods not becoming ready after running pgo restore. This fix is a result of the change in methodology for how a restore occurs.

- The pgo scaledown command now allows for the removal of replicas that are not actively running.

- The pgo scaledown --query command now shows replicas that may not be in an active state.

- The pgBackRest URI style defaults to host if it is not set.

- pgBackRest commands can now be executed even if there are multiple pgBackRest Pods available in a Deployment, so long as there is only one “running” pgBackRest Pod. Reported by Rubin Simons (@rubin55).

- Ensure pgBackRest S3 Secrets can be upgraded from PostgreSQL Operator 4.3.

- Ensure the pgBouncer port is derived from the cluster’s port, not the Operator configuration defaults.

- External WAL PVCs are only removed for the replica they are targeted for on a scaledown. Reported by (@dakine1111).

- When deleting a cluster with the --keep-backups flag, ensure that backups created via --backup-type=pgdump are retained.

- When using pgo df, return an error if a cluster is not found instead of timing out.

- pgBadger now has a default memory limit of 64Mi, which should help avoid a visit from the OOM killer.

- The Postgres Exporter now works if it is deployed in a TLS-only environment, i.e. when the --tls-only flag is set. Reported by (@shuhanfan).

- Fix pgo label when applying multiple labels at once.

- Fix pgo create pgorole so that the expression --permissions=* works.

- The operator container will no longer panic if all Deployments are scaled to 0 without using the pgo update cluster <mycluster> --shutdown command.
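The pgBackRest fix listed above (several pgBackRest Pods in a Deployment, with only one “running”) comes down to a selection rule: proceed only if exactly one Pod is running. A minimal Python sketch of that rule follows. The (name, phase) records, the Pod names, and the select_running_pod function are hypothetical; a real client would read Pod phases from the Kubernetes API.

```python
from typing import List, Tuple


def select_running_pod(pods: List[Tuple[str, str]]) -> str:
    """Return the name of the single Pod whose phase is "Running".

    `pods` is a list of (name, phase) pairs. Raises ValueError unless exactly
    one Pod is running, mirroring the "only one running pgBackRest Pod" condition.
    """
    running = [name for name, phase in pods if phase == "Running"]
    if len(running) != 1:
        raise ValueError(f"expected exactly one running pod, found {len(running)}")
    return running[0]


if __name__ == "__main__":
    pods = [
        ("hippo-backrest-shared-repo-abc12", "Running"),
        ("hippo-backrest-shared-repo-def34", "Pending"),
    ]
    print(select_running_pod(pods))  # hippo-backrest-shared-repo-abc12
```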